Introduction

Testing whether differences in statistical measures such as means, variances, and correlation coefficients are significant is essential in psychology and other behavioral sciences. Such tests help researchers determine whether observed patterns in data reflect genuine relationships or merely random variation.

Statistical inference provides the framework for deciding whether differences observed in sample data reflect actual differences in the population. This is crucial when comparing groups, assessing variability, or exploring associations. By evaluating the significance of differences in means, variances, and correlation coefficients, researchers test hypotheses, draw informed conclusions, and guide future research.

Significance of Differences in Means

Comparing group means is one of the most common statistical tasks. For example, a psychologist might compare the mean test scores of students who received a specific teaching method against those who did not. The question is whether the observed difference in means reflects a real effect or occurred by chance.

1. Independent Samples t-Test

Used when comparing means from two independent groups, such as an experimental group and a control group. The test evaluates whether the difference in sample means is statistically significant, assuming equal variances and approximately normal distributions.

Assumptions:

- The two samples are independent

- The data are approximately normally distributed

- The variances in both groups are equal
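As a rough illustration of the test described above, the following sketch computes the equal-variance t statistic and its degrees of freedom using only Python's standard library. The function name `pooled_t_test` and the scores are invented for this example; they are not taken from any study.

```python
import math
from statistics import mean, variance

def pooled_t_test(a, b):
    """Return (t, df) for an equal-variance independent-samples t-test."""
    n1, n2 = len(a), len(b)
    # Pooled variance: sample variances weighted by their degrees of freedom.
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))  # standard error of the difference
    return (mean(a) - mean(b)) / se, n1 + n2 - 2

# Hypothetical test scores, invented for illustration only.
treatment = [78, 85, 90, 72, 88]
control = [70, 75, 80, 65, 74]
t, df = pooled_t_test(treatment, control)
print(f"t({df}) = {t:.2f}")  # t(8) = 2.34
```

With 8 degrees of freedom, the two-tailed critical value at α = 0.05 is about 2.306, so this made-up difference would just reach significance. In practice a library routine such as `scipy.stats.ttest_ind` would normally be used, since it also returns the p-value.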

2. Paired Samples t-Test

Used for comparing two related means, such as pre- and post-treatment scores from the same individuals. It analyzes the mean of the differences within paired observations.
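Because the paired test works on difference scores, it reduces to a one-sample t-test of whether the mean difference is zero. A minimal sketch, with a hypothetical function name and invented pre/post scores:

```python
import math
from statistics import mean, stdev

def paired_t_test(before, after):
    """Return (t, df) for a paired-samples t-test on after - before."""
    diffs = [post - pre for pre, post in zip(before, after)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs) / se, n - 1

# Hypothetical pre- and post-treatment scores for five participants.
pre_scores = [10, 12, 9, 14, 11]
post_scores = [12, 15, 10, 17, 13]
t, df = paired_t_test(pre_scores, post_scores)
print(f"t({df}) = {t:.2f}")  # t(4) = 5.88
```

Note that only the spread of the differences enters the standard error, which is why a paired design can detect effects that an independent-groups analysis of the same numbers would miss.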

3. Analysis of Variance (ANOVA)

When comparing more than two means, ANOVA is appropriate. It partitions the total variance into between-group and within-group components and forms their ratio, the F statistic. A significant F-value indicates that at least one group mean differs from another, but not which one; post-hoc comparisons are needed to locate the difference.
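The partition of variance can be made concrete with a small sketch. The function name `one_way_anova` and the three groups of scores below are illustrative assumptions, chosen so the arithmetic is easy to follow by hand.

```python
from statistics import mean

def one_way_anova(*groups):
    """Return (F, df_between, df_within, ss_between, ss_within)."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within, ss_between, ss_within

# Hypothetical scores under three conditions.
f_stat, df_b, df_w, ss_b, ss_w = one_way_anova([4, 5, 6], [6, 7, 8], [8, 9, 10])
print(f"F({df_b}, {df_w}) = {f_stat:.1f}")  # F(2, 6) = 12.0
```

Here the between-group sum of squares is 24 and the within-group sum of squares is 6, which together make up the total sum of squares of 30.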

4. Welch’s t-Test

When the assumption of equal variances between groups is violated, Welch’s t-test serves as a more robust alternative to the independent samples t-test. It adjusts the degrees of freedom and keeps the Type I error rate closer to the nominal level when sample sizes or variances are unequal.
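The degrees-of-freedom adjustment is usually computed with the Welch-Satterthwaite approximation. The sketch below assumes that formula; the function name and data are hypothetical.

```python
import math
from statistics import mean, variance

def welch_t_test(a, b):
    """Return (t, df) using separate variances and Welch-Satterthwaite df."""
    n1, n2 = len(a), len(b)
    v1, v2 = variance(a) / n1, variance(b) / n2  # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Hypothetical groups with unequal sizes and clearly unequal spread.
low_spread = [50, 52, 49, 51, 48, 50, 53, 47]
high_spread = [40, 60, 35, 70, 55]
t, df = welch_t_test(low_spread, high_spread)
print(f"t = {t:.2f}, df = {df:.2f}")
```

The adjusted df is generally not a whole number and never exceeds n1 + n2 - 2. When both groups have the same size and variance it equals that pooled value. In SciPy the same test is available as `scipy.stats.ttest_ind(a, b, equal_var=False)`.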

5. Mann–Whitney U Test (Wilcoxon Rank-Sum Test)

A non-parametric alternative to the independent samples t-test, this test compares two independent groups without assuming normal distribution. It is ideal for ordinal data or when distributions are skewed.
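One way to see what the U statistic measures is to count, over all cross-group pairs, how often a score from one group exceeds a score from the other. The sketch below does exactly that and adds a large-sample normal approximation for the p-value. The function names and ratings are hypothetical, and the approximation deliberately omits tie and continuity corrections, so it is only a rough guide for small or heavily tied samples.

```python
import math
from statistics import NormalDist

def mann_whitney_u(a, b):
    """Return (U_a, U_b); ties between groups count as half a win."""
    u_a = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    u_b = len(a) * len(b) - u_a
    return u_a, u_b

def normal_approx_p(u, n1, n2):
    """Two-sided p-value from the uncorrected normal approximation."""
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical ordinal ratings (1-5) from two independent groups.
group_a = [3, 4, 2, 5, 4, 3, 5, 4]
group_b = [2, 1, 3, 2, 3, 1, 2, 4]
u_a, u_b = mann_whitney_u(group_a, group_b)
p_value = normal_approx_p(min(u_a, u_b), len(group_a), len(group_b))
print(f"U = {min(u_a, u_b)}, approximate p = {p_value:.3f}")
```

For real analyses, `scipy.stats.mannwhitneyu` handles ties and exact small-sample p-values.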

6. Wilcoxon Signed-Rank Test

A non-parametric counterpart to the paired t-test, this test is used when comparing two related samples with ordinal data or non-normal distribution.
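The statistic behind this test can be sketched as follows: take the paired differences, drop zeros, rank the absolute differences (sharing ranks among ties), and sum the ranks separately for positive and negative differences. The helper names and scores are hypothetical, and dropping zero differences is one common convention rather than the only one.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def wilcoxon_signed_rank(before, after):
    """Return (W_plus, W_minus), the rank sums of positive and negative differences."""
    diffs = [post - pre for pre, post in zip(before, after) if post != pre]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus

# Hypothetical paired scores; one pair is unchanged and one decreases.
pre_scores = [10, 12, 9, 14, 11, 13]
post_scores = [12, 15, 10, 18, 11, 11]
w_plus, w_minus = wilcoxon_signed_rank(pre_scores, post_scores)
print(w_plus, w_minus)  # 12.5 2.5
```

The smaller of the two sums is typically compared against a critical value or converted to a p-value, for example with `scipy.stats.wilcoxon`.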

7. Kruskal–Wallis Test

This test is the non-parametric alternative to one-way ANOVA. It is used for comparing more than two independent groups when data are ordinal or not normally distributed. Post-hoc pairwise comparisons may follow a significant result.
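A minimal sketch of the H statistic, which compares the rank sums of the groups after pooling and ranking all scores. To stay short, this version assumes there are no tied scores and raises an error otherwise; the function name and data are invented for illustration.

```python
def kruskal_wallis_h(*groups):
    """Return (H, df) for untied data; H is referred to chi-square with df = k - 1."""
    pooled = sorted(x for g in groups for x in g)
    if len(set(pooled)) != len(pooled):
        raise ValueError("this sketch assumes no tied scores")
    rank = {x: i + 1 for i, x in enumerate(pooled)}  # rank 1 = smallest score
    n_total = len(pooled)
    # Sum over groups of (rank sum squared) / group size.
    rank_term = sum(sum(rank[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)
    return h, len(groups) - 1

# Hypothetical scores from three independent groups.
h, df = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(f"H({df}) = {h:.1f}")  # H(2) = 7.2
```

With 2 degrees of freedom the chi-square critical value at α = 0.05 is about 5.99, so these perfectly separated groups would yield a significant result. `scipy.stats.kruskal` applies the tie correction that this sketch leaves out.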

Significance of Differences in Variances

Variance measures the dispersion of scores. Differences in variance can indicate differing levels of consistency or reliability across conditions. In research, it’s important to evaluate if groups differ not only in means but also in the spread of scores.

Types of Variance

- Population Variance (σ²): Reflects the true variability in a population.

- Sample Variance (s²): An estimate of the population variance based on sample data.

- Between-Group Variance: Used in ANOVA to detect variability between group means.

- Within-Group Variance: Captures variability among individuals in the same group.

- Pooled Variance: A weighted average of variances from two or more groups, often used in t-tests when variances are assumed equal.
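The practical difference between the population and sample formulas is the divisor: N for the population variance and n - 1 for the sample estimate. Python's `statistics` module exposes both, as this short sketch with made-up scores shows.

```python
from statistics import pvariance, variance

# Hypothetical scores; their mean is 5 and the squared deviations sum to 32.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

population_var = pvariance(scores)  # divides by N = 8
sample_var = variance(scores)       # divides by n - 1 = 7
print(population_var, round(sample_var, 3))  # 4 4.571
```

The sample formula gives the larger value because dividing by n - 1 compensates for estimating the mean from the same data.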

F-Test for Variances

Used to compare two sample variances by calculating the ratio of the larger variance to the smaller. This test assumes that the data in each group are normally distributed.

This test is sensitive to deviations from normality, and violations can lead to misleading conclusions.
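A minimal sketch of the variance ratio itself, with hypothetical data and a hypothetical function name. It returns only the F ratio and its degrees of freedom; the p-value would come from the F distribution, for instance via `scipy.stats.f.sf`.

```python
from statistics import variance

def variance_ratio(a, b):
    """Return (F, df_numerator, df_denominator) with the larger variance on top."""
    var_a, var_b = variance(a), variance(b)
    if var_a >= var_b:
        return var_a / var_b, len(a) - 1, len(b) - 1
    return var_b / var_a, len(b) - 1, len(a) - 1

# Hypothetical scores from two groups.
group_a = [78, 85, 90, 72, 88]
group_b = [70, 75, 80, 65, 74]
f_ratio, df_num, df_den = variance_ratio(group_a, group_b)
print(f"F({df_num}, {df_den}) = {f_ratio:.2f}")  # F(4, 4) = 1.76
```

Placing the larger variance in the numerator guarantees F is at least 1, so only the upper tail of the F distribution needs to be consulted.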

Levene’s Test

Levene’s test is a more robust alternative to the F-test. It assesses the equality of variances by analyzing the absolute deviations from group means. It is less sensitive to non-normal distributions and is commonly used before t-tests or ANOVA.
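Computationally, the mean-based version described above amounts to a one-way ANOVA on the absolute deviations of scores from their group means. The following sketch assumes that formulation; the function name and groups are invented for illustration.

```python
from statistics import mean

def levene_w(*groups):
    """Return (W, df_between, df_within) for the mean-based Levene's test."""
    # Step 1: absolute deviation of every score from its own group mean.
    devs = [[abs(x - mean(g)) for x in g] for g in groups]
    # Step 2: one-way ANOVA F ratio computed on those deviations.
    all_devs = [z for d in devs for z in d]
    grand = mean(all_devs)
    ss_between = sum(len(d) * (mean(d) - grand) ** 2 for d in devs)
    ss_within = sum((z - mean(d)) ** 2 for d in devs for z in d)
    df_between = len(groups) - 1
    df_within = len(all_devs) - len(groups)
    w = (ss_between / df_between) / (ss_within / df_within)
    return w, df_between, df_within

# Hypothetical groups with the same mean but increasing spread.
w, df_b, df_w = levene_w([4, 5, 6], [3, 5, 7], [1, 5, 9])
print(f"W({df_b}, {df_w}) = {w:.3f}")  # W(2, 6) = 1.333
```

A common variant replaces group means with group medians for extra robustness; in SciPy the choice is made through the `center` argument of `scipy.stats.levene`.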

Additional Measures of Dispersion

Besides variance and standard deviation, researchers use various metrics to understand data spread:

- Standard Deviation (SD): The square root of the variance; it expresses the typical distance of scores from the mean in the original units of measurement.

- Range: The difference between the highest and lowest values. It is simple but sensitive to outliers.

- Interquartile Range (IQR): The difference between the 75th percentile (Q3) and 25th percentile (Q1). It captures the middle 50% of the data and is resistant to outliers.

- Quartile Deviation: Half of the IQR. Also known as the semi-interquartile range, it provides a concise measure of spread around the median.

- Coefficient of Variation (CV): The ratio of the standard deviation to the mean, expressed as a percentage. It is useful for comparing variability across variables with different units.

- Root Mean Square (RMS): A quadratic mean often used in signal analysis, reflecting both the magnitude and variability of values.

These measures allow researchers to choose appropriate tests, interpret results meaningfully, and report data dispersion accurately.
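The measures listed above can be computed side by side for a single data set. This sketch uses invented scores and Python's `statistics` module; quartile conventions differ between textbooks and software, so the IQR shown reflects the module's default ("exclusive") method and may not match other tools exactly.

```python
import math
from statistics import mean, pstdev, quantiles, stdev

# Hypothetical scores with mean 5.
scores = [2, 4, 4, 4, 5, 5, 7, 9]

sd_sample = stdev(scores)                  # square root of the sample variance
sd_population = pstdev(scores)             # square root of the population variance
value_range = max(scores) - min(scores)    # highest minus lowest score
q1, _median, q3 = quantiles(scores, n=4)   # quartile cut points
iqr = q3 - q1
quartile_deviation = iqr / 2
cv_percent = sd_sample / mean(scores) * 100
rms = math.sqrt(mean(x ** 2 for x in scores))

print(value_range, iqr, quartile_deviation, round(cv_percent, 1), round(rms, 3))
```

For these scores the squared RMS (29) equals the squared mean (25) plus the population variance (4), which shows how RMS combines magnitude and variability.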

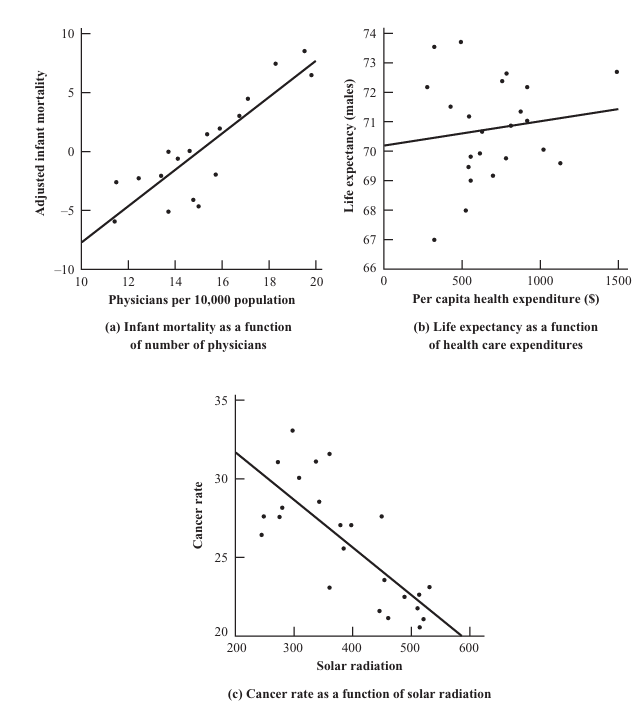

Significance of Correlation Coefficients

Pearson’s Correlation Coefficient

Pearson’s r measures the strength and direction of a linear relationship between two variables. The value ranges from -1 to +1, where values close to 0 imply weak or no linear association, and values close to ±1 imply strong association.
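A common way to test whether r differs significantly from zero is to convert it to a t statistic with n - 2 degrees of freedom, t = r√(n − 2) / √(1 − r²). The sketch below assumes that standard test; the function name and data are hypothetical.

```python
import math
from statistics import mean

def pearson_r_test(x, y):
    """Return (r, t, df) for Pearson's r and its test against zero."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    df = len(x) - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r ** 2)
    return r, t, df

# Hypothetical paired observations from five participants.
hours_studied = [1, 2, 3, 4, 5]
quiz_score = [2, 4, 5, 4, 5]
r, t, df = pearson_r_test(hours_studied, quiz_score)
print(f"r = {r:.2f}, t({df}) = {t:.2f}")  # r = 0.77, t(3) = 2.12
```

Even though r = 0.77 looks strong, the two-tailed critical value for 3 degrees of freedom is about 3.18, so with only five observations this correlation would not be significant at α = 0.05. `scipy.stats.pearsonr` returns r and the p-value directly.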

Interpreting Significance and Effect Sizes

A p-value is the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true. Researchers typically use α = 0.05 as a cutoff. A p-value below this threshold leads to rejecting the null hypothesis, indicating a statistically significant effect.

Statistical significance does not always equate to practical significance. Effect size measures quantify the magnitude of an observed effect:

- Cohen’s d: Measures the standardized difference between two means.

- Eta squared (η²): Used in ANOVA to represent the proportion of total variance explained by group membership.

- r squared (r²): Represents the proportion of shared variance in a correlation.
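The first two effect sizes can be computed directly from raw scores, as in this sketch. The function names and data are hypothetical, and Cohen's d is computed here with the pooled standard deviation, which is one of several conventions.

```python
import math
from statistics import mean, variance

def cohens_d(a, b):
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)

def eta_squared(*groups):
    """Proportion of total variability explained by group membership."""
    all_scores = [x for g in groups for x in g]
    grand = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_total = sum((x - grand) ** 2 for x in all_scores)
    return ss_between / ss_total

# Hypothetical scores, invented for illustration.
d = cohens_d([78, 85, 90, 72, 88], [70, 75, 80, 65, 74])
eta2 = eta_squared([4, 5, 6], [6, 7, 8], [8, 9, 10])
print(f"d = {d:.2f}, eta squared = {eta2:.2f}")  # d = 1.48, eta squared = 0.80
```

For a correlation, r² is simply the square of Pearson's r, so r = 0.50 corresponds to 25% shared variance.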

Confidence intervals give a range of plausible values for the population parameter: across repeated samples, a 95% confidence interval would contain the true value about 95% of the time. Unlike p-values, they convey the precision of the estimate.
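As one concrete case, an approximate confidence interval for a correlation can be built with the Fisher z-transformation: transform r with the inverse hyperbolic tangent, add and subtract a normal critical value times 1/√(n − 3), and transform back. The function name and the values r = 0.50, n = 28 are illustrative assumptions.

```python
import math
from statistics import NormalDist

def fisher_ci(r, n, confidence=0.95):
    """Approximate confidence interval for a correlation via Fisher's z."""
    z = math.atanh(r)                 # Fisher z-transformation of r
    se = 1 / math.sqrt(n - 3)         # standard error on the z scale
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

# Hypothetical result: r = 0.50 observed in a sample of 28 participants.
lower, upper = fisher_ci(0.50, 28)
print(f"95% CI: [{lower:.2f}, {upper:.2f}]")
```

The interval is noticeably wide at this sample size and narrows as n grows, which is the kind of precision information a p-value alone does not convey.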

Assumptions Underlying Significance Tests

Inferential statistical tests rely on several core assumptions:

- Normality: Scores (more precisely, the residuals) are approximately normally distributed within each group.

- Homogeneity of Variance: The variance across groups is equal.

- Independence: Observations are independent of each other.

Violations of these assumptions can lead to inflated Type I or Type II error rates. Remedies include data transformations (e.g., log, square root), using non-parametric alternatives, or resampling techniques such as bootstrapping.
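Bootstrapping, the last remedy mentioned, can be sketched in a few lines: resample each group with replacement many times, recompute the statistic each time, and read a confidence interval off the percentiles of the results. The function name, the number of resamples, the fixed seed, and the data are all assumptions made for this illustration; the percentile method shown is the simplest of several bootstrap intervals.

```python
import random
from statistics import mean

def bootstrap_mean_diff_ci(a, b, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap interval for mean(a) - mean(b)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        resampled_a = rng.choices(a, k=len(a))
        resampled_b = rng.choices(b, k=len(b))
        diffs.append(mean(resampled_a) - mean(resampled_b))
    diffs.sort()
    tail = (1 - confidence) / 2
    lower = diffs[int(tail * n_resamples)]
    upper = diffs[int((1 - tail) * n_resamples) - 1]
    return lower, upper

# Hypothetical scores from two independent groups.
treatment = [78, 85, 90, 72, 88]
control = [70, 75, 80, 65, 74]
lower, upper = bootstrap_mean_diff_ci(treatment, control)
print(f"95% bootstrap CI for the mean difference: [{lower:.1f}, {upper:.1f}]")
```

If the interval excludes zero, the data are inconsistent with no difference at roughly the chosen level, without relying on a normality assumption. With samples as small as these the interval is itself rough.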

Multiple Testing and Error Control

Conducting multiple tests increases the probability of making at least one Type I error (the familywise error rate). Several correction methods exist to control for this:

- Bonferroni Correction: Adjusts the significance threshold by dividing α by the number of comparisons.

- Holm-Bonferroni Method: A sequentially adjusted method that is less conservative than Bonferroni.

- False Discovery Rate (FDR): Controls the expected proportion of false positives among rejected hypotheses.

These methods help maintain the overall validity of statistical conclusions in studies involving multiple comparisons.
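The three corrections can be compared on the same set of p-values. In this sketch the function names and p-values are invented, and the FDR procedure is assumed to be Benjamini-Hochberg, the most widely used one; each function returns a list of True/False rejection decisions in the original order.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject when p <= alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]

def holm(p_values, alpha=0.05):
    """Step-down Holm-Bonferroni: stop at the first non-significant p."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for step, i in enumerate(order):
        if p_values[i] <= alpha / (m - step):
            reject[i] = True
        else:
            break
    return reject

def benjamini_hochberg(p_values, alpha=0.05):
    """Step-up FDR control: reject the k smallest p, k = largest rank passing."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= alpha * rank / m:
            cutoff = rank
    reject = [False] * m
    for i in order[:cutoff]:
        reject[i] = True
    return reject

# Hypothetical p-values from five comparisons.
p_values = [0.001, 0.012, 0.020, 0.039, 0.300]
print(sum(bonferroni(p_values)), sum(holm(p_values)), sum(benjamini_hochberg(p_values)))  # 1 2 4
```

On these numbers Bonferroni rejects one hypothesis, Holm two, and Benjamini-Hochberg four, illustrating the ordering from most to least conservative. Library implementations such as `statsmodels.stats.multitest.multipletests` also return adjusted p-values.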

Conclusion

Assessing the significance of differences in means, variances, and correlations is foundational to scientific inquiry in psychology and related fields. These methods allow researchers to differentiate real effects from random variation, ensuring that data-driven conclusions are statistically valid and theoretically meaningful. Complementing significance tests with robust effect size measures and confidence intervals enriches the interpretation and impact of findings. Careful attention to test assumptions, multiple comparisons, and reporting standards ensures the reliability and transparency of psychological research.

References

Howell, D. C. (2009). Statistical Methods for Psychology (7th ed.). Wadsworth Cengage Learning.

Field, A. (2013). Discovering Statistics Using IBM SPSS Statistics (4th ed.). Sage Publications.

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences (2nd ed.). Lawrence Erlbaum Associates.

American Psychological Association. (2020). Publication Manual of the American Psychological Association (7th ed.).

Niwlikar, B. A. (2025, July 7). 3 Important Significance of Differences- Means, Variances, and Correlation Coefficients. Careershodh. https://www.careershodh.com/3-important-significance-of-differences/